Black box AI: The challenge for healthcare adoption


After writing my last post, I wanted to revisit the black box phenomenon in AI models. AI is already embedded in many medical devices today, and the number of FDA-approved AI algorithms continues to grow. However, I think it is important to understand how the algorithms built into such devices differ from one another. In this post, I aim to provide a technical lens on this topic.

A database of FDA-approved AI algorithms

I was able to dig up a 2020 article in npj Digital Medicine (a Nature Portfolio journal), which was the first I saw to categorize these devices by algorithm type in a database. The database appears to have stopped updating in mid-2021, as the FDA began tracking these devices officially. I also recommend reading the non-FDA, publicly available resources listed on the FDA site for additional context.

I was interested in finding devices that used neural networks, given their rise in popularity in recent years, and settled on the first mention of an artificial neural network in the database: the Eko Analysis Software (EAS), developed by Eko Devices Inc. and cleared by the FDA through the 510(k) pathway (K192004) on January 15, 2020. Its summary document describes the tool as follows:

The Eko Analysis Software is a cloud-based software API that allows a user to upload synchronized ECG and heart sound/phonocardiogram (PCG) data for analysis. The software uses several methods to interpret the acquired signals including signal processing and artificial neural networks. The API can be electronically interfaced, and perform analysis with data transferred from multiple mobile or computer based applications.

Discriminative vs. Generative models

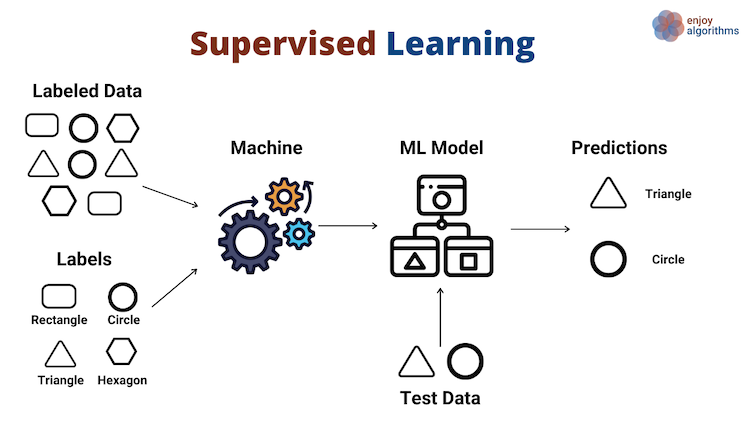

To better understand the significance of neural networks, we should know where they sit on the spectrum of discriminative vs. generative models. First, let’s review the two main approaches to performing machine learning tasks: supervised and unsupervised learning.

At a high level, supervised learning uses labeled examples, that is, pairs of inputs (x) and outputs (y), to fit a model. Here, the machine learning algorithm estimates, or learns, a function that best maps each input to its known output (the “label”):

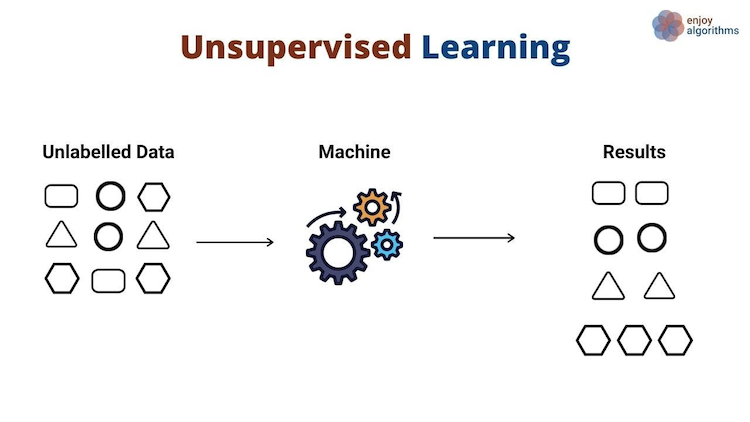

In contrast, unsupervised learning works with unlabeled inputs and has no target outputs. Here, the machine learning algorithm estimates a function that finds similarities among the input samples and groups them accordingly; those groupings are the output:

Credit: Kumar, R. Supervised, Unsupervised and Semi-supervised Learning with Real-life Usecase. EnjoyAlgorithms.

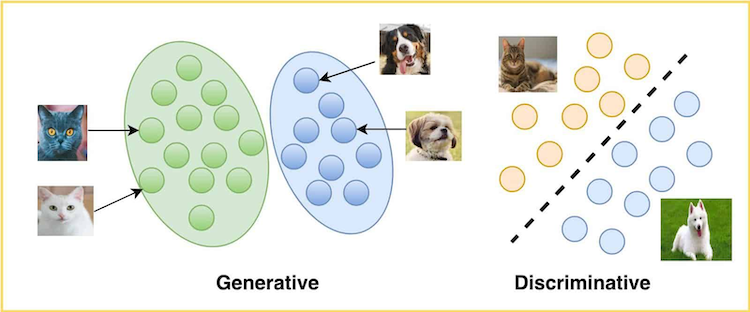

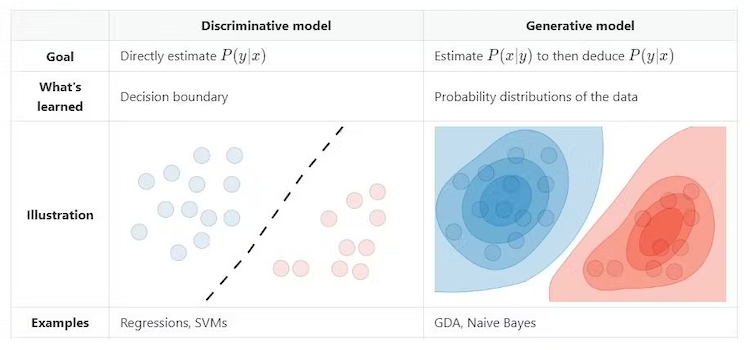
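To make the contrast concrete, here is a minimal, standard-library Python sketch. The technique choices are mine rather than the cited source’s: ordinary least squares stands in for “learning a function from labeled data,” and a two-cluster k-means stands in for “grouping by similarity.” The function names and data points are hypothetical.

```python
# Supervised learning: every input x comes with a label y.
# Ordinary least squares learns the line f(x) = slope * x + intercept.
def fit_line(xs, ys):
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Unsupervised learning: only inputs, no labels.
# 1-D k-means with k = 2 groups points by their distance to two centers.
def two_means(xs, iters=10):
    centers = [min(xs), max(xs)]  # simple initialization for two clusters
    groups = [[], []]
    for _ in range(iters):
        groups = [[], []]
        for x in xs:
            nearest = 0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1
            groups[nearest].append(x)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


if __name__ == "__main__":
    slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
    print(slope, intercept)  # 2.0 1.0
    centers, groups = two_means([1.0, 1.2, 0.8, 5.0, 5.3, 4.7])
    print(groups)  # [[1.0, 1.2, 0.8], [5.0, 5.3, 4.7]]
```

The supervised function needs the `ys` to learn anything; the unsupervised one never sees a label and still recovers two groups, but it cannot say what those groups mean.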

The models that fall under each approach can generally be categorized as discriminative or generative. A discriminative model aims to separate data points into classes by learning the decision boundaries between them. A generative model aims to learn how the data is distributed, so that it can generate new data points similar to those it was trained on:

Credit: LearnOpenCV Team. Generative and Discriminative Models. LearnOpenCV (May 2021).

Mathematically, discriminative models are typically used in supervised learning. Their goal is to estimate the conditional probability of the output (y) given the input (x), that is, the likelihood of one event occurring given that another has occurred. Generative models are typically used in unsupervised learning. Their goal is to estimate the joint probability of the input (x) and output (y), that is, the likelihood that both events occur together. New data points can be generated by sampling from this learned probability distribution:

Credit: Amidi, S and Amidi, A. CS 229 - Machine Learning. Stanford.
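In symbols, the two goals above can be written as follows. This is the standard textbook formulation in my own notation, not a reproduction of the cited cheatsheet:

```latex
% Discriminative: model the conditional directly, then predict the most likely label
\hat{y} = \arg\max_{y} \; p(y \mid x)

% Generative: model the joint distribution, factored into likelihood and class prior
p(x, y) = p(x \mid y)\, p(y)

% ...which still yields a classifier through Bayes' rule
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')}

% ...and can generate new data by sampling a label, then an input for that label
y \sim p(y), \qquad x \sim p(x \mid y)
```

The Bayes’ rule line is one reason the categories blur: a generative model can always be turned into a classifier, whereas a discriminative model has no p(x) to sample new inputs from.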

While neural networks are often discussed in the context of generative AI, they are trained with both supervised and unsupervised learning, and the lines are somewhat blurred, as discussed in some forums. Thus, I would say they can be either discriminative or generative models, depending on the specific use case. For example, neural networks can be used for image classification (e.g., convolutional neural networks) or for generating new data (e.g., transformer-based models such as GPT).

As for the Eko Analysis Software, its underlying neural networks are discriminative models used for classification: the summary document states that the ECG recordings are classified as either Normal or Atrial Fibrillation. No new data is generated. It is also worth noting that, as of this writing, the FDA has not cleared any generative AI devices.
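To make the distinction concrete, here is a toy, standard-library Python sketch on made-up one-dimensional data. It reuses the Normal/AF labels purely for illustration; the feature values are synthetic, and this is not how the EAS (or any real device) works. The generative side assumes one Gaussian per class (my simplifying choice), so it learns p(x, y) and can both classify and sample. The discriminative side is logistic regression trained by plain gradient descent, which learns only p(y | x).

```python
import math
import random

# Synthetic 1-D data: one made-up feature value x per recording.
# Illustrative numbers only; these are not ECG measurements.
DATA = {
    "Normal": [0.9, 1.1, 1.0, 1.2, 0.8],
    "AF": [2.1, 1.9, 2.3, 2.0, 2.2],
}


def mean_std(xs):
    m = sum(xs) / len(xs)
    return m, math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


def gaussian_pdf(x, m, s):
    return math.exp(-((x - m) ** 2) / (2 * s * s)) / (s * math.sqrt(2 * math.pi))


# Generative model: learn p(y) and p(x | y), i.e. the joint p(x, y).
N = sum(len(xs) for xs in DATA.values())
PRIORS = {y: len(xs) / N for y, xs in DATA.items()}
PARAMS = {y: mean_std(xs) for y, xs in DATA.items()}


def generative_posterior(x):
    """Classify via Bayes' rule: p(y | x) is proportional to p(x | y) p(y)."""
    joint = {y: PRIORS[y] * gaussian_pdf(x, *PARAMS[y]) for y in DATA}
    z = sum(joint.values())
    return {y: p / z for y, p in joint.items()}


def generate_sample(rng):
    """Create a new (x, y) pair: draw y ~ p(y), then x ~ p(x | y)."""
    y = rng.choices(list(PRIORS), weights=list(PRIORS.values()))[0]
    m, s = PARAMS[y]
    return rng.gauss(m, s), y


# Discriminative model: logistic regression learns p(y = AF | x) directly
# by gradient descent on the log loss. It never models p(x), so it has
# nothing to sample new x values from.
def train_logistic(data, lr=0.5, epochs=2000):
    points = [(x, 1.0 if y == "AF" else 0.0) for y, xs in data.items() for x in xs]
    w = b = 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, t in points:
            p = 1 / (1 + math.exp(-(w * x + b)))
            grad_w += (p - t) * x
            grad_b += p - t
        w -= lr * grad_w / len(points)
        b -= lr * grad_b / len(points)
    return w, b


W, B = train_logistic(DATA)


def discriminative_prob_af(x):
    return 1 / (1 + math.exp(-(W * x + B)))


if __name__ == "__main__":
    print(generative_posterior(1.05))  # both models can classify...
    print(discriminative_prob_af(2.1))
    print(generate_sample(random.Random(0)))  # ...only the generative one can sample
```

Both models can put a label on a new point, but only the generative one can produce new data, which is the sense in which a Normal/AF classifier generates nothing new.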

Black box AI vs. glass box AI

So with all this discussion on discriminative vs. generative models, why does this matter in practice? AI systems as black boxes are nothing new; I first encountered the issue during my time at IBM Watson. Fast forward a decade, and the algorithms powering today’s AI systems are far more advanced and harder to understand, even for the researchers who developed them.

Much of this advancement is due to the “deep learning revolution” of 2012. As Ars Technica explains, simple (single-layer) neural networks were first developed in the late 1950s, but they only began to see major success in 2012 and thereafter. The main contributors to this success were a combination of:

- Deeper networks (more layers)

- Large datasets (more data)

- Powerful compute (more processing efficiency)

Why it matters: These systems can now learn much more complex relationships on their own. On the one hand, this is far more efficient than having a human explicitly code those relationships; on the other, it is far harder to decipher why the system made the decisions it did as information flows through the network. In this sense, discriminative models are more interpretable than generative models, since the relationship between inputs and outputs remains consistent.

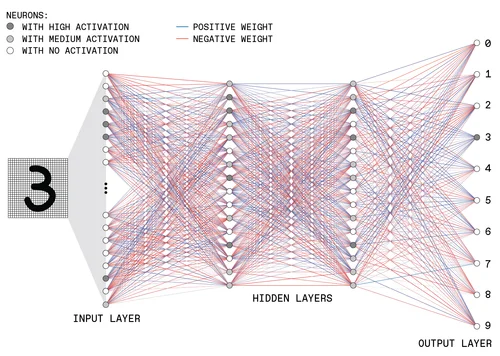

To get a bird’s-eye view, let’s look at the complexity involved in a neural network with many layers:

Credit: Moore, S., Schneider, D., Strickland, E. How Deep Learning Works. IEEE Spectrum (September 2021).

Imagine trying to unravel this and explain it to a non-technical person, let alone someone in a completely different field like healthcare.
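To put a rough number on that complexity, here is a small Python sketch that counts the learnable parameters (weights and biases) in a fully connected network. The layer sizes are hypothetical and chosen only for illustration; many modern deep networks are far larger and use other layer types.

```python
def count_parameters(layer_sizes):
    """Weights plus biases in a fully connected (dense) network.

    A layer with n_in inputs and n_out outputs has an n_in x n_out
    weight matrix and n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


if __name__ == "__main__":
    # Hypothetical network: 784 inputs (e.g. a 28x28 image), two hidden
    # layers of 512 units, and 10 output classes.
    print(count_parameters([784, 512, 512, 10]))  # 669706
```

Even this modest network has roughly 670,000 individually learned numbers, none of which has an obvious meaning on its own, which is one way to see why tracing a single prediction back through the layers is so hard.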

Some argue that these increasingly sophisticated AI systems (specifically generative ones) pose a risk to patient care. If an AI system makes a diagnosis, how did it reach that conclusion? Would it reach the same conclusion next time? There appears to be some consensus that black box AI is not an issue in low-stakes use cases, such as administrative tasks. However, the stakes are high in clinical decision-making, where accountability and liability are still not well defined. As a result, the lack of transparency may hinder wider adoption of AI in healthcare.
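The “same conclusion next time?” question often comes down to how a system turns its internal scores into an output. The Python sketch below uses hypothetical labels and scores: always taking the highest-scoring label (greedy, or argmax) is deterministic, whereas generative systems commonly sample from a probability distribution, so the same input can yield different outputs unless the randomness is fixed, for example by seeding or by decoding greedily.

```python
import math
import random

# Hypothetical labels and raw model scores (logits) for a single input.
LABELS = ["Normal", "Abnormal", "Inconclusive"]
SCORES = [2.0, 1.5, 0.2]


def softmax(scores, temperature=1.0):
    """Convert scores to probabilities; a higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_decision(scores):
    """Deterministic: always pick the highest-scoring label."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return LABELS[best]


def sampled_decision(scores, rng, temperature=1.0):
    """Stochastic: draw a label in proportion to its probability."""
    return rng.choices(LABELS, weights=softmax(scores, temperature))[0]


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so sampled results differ between runs
    print([greedy_decision(SCORES) for _ in range(5)])  # same label every time
    print([sampled_decision(SCORES, rng) for _ in range(5)])  # may vary
```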

Healthcare would be better served by glass box AI, where the algorithms, training data, and model are freely available for inspection. Explainable AI is an area of ongoing development that may help mitigate these risks, although it remains to be seen what constitutes a satisfactory explanation.
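One of the simplest explainable-AI techniques is occlusion (perturbation) attribution: replace one input at a time with a reference value and measure how much the model’s output changes. The Python sketch below applies it to a hypothetical stand-in model; the feature names, weights, and baseline values are invented for illustration, and this is only one of many explanation methods.

```python
import math


def black_box(features):
    """Stand-in for an opaque model (hypothetical features and weights).

    features = (heart_rate, rhythm_irregularity, age)
    Returns a score between 0 and 1.
    """
    heart_rate, irregularity, age = features
    z = 0.02 * (heart_rate - 70) + 3.0 * irregularity ** 2 + 0.01 * age - 2.0
    return 1 / (1 + math.exp(-z))


def occlusion_attribution(model, features, baseline):
    """Score drop when each feature is replaced by its baseline value.

    A larger drop suggests that feature pushed the score up more for this input.
    """
    original = model(features)
    attributions = []
    for i in range(len(features)):
        perturbed = list(features)
        perturbed[i] = baseline[i]
        attributions.append(original - model(perturbed))
    return attributions


if __name__ == "__main__":
    patient = (95, 0.9, 60)  # hypothetical input
    baseline = (70, 0.1, 60)  # hypothetical "typical" reference values
    names = ["heart_rate", "irregularity", "age"]
    for name, a in zip(names, occlusion_attribution(black_box, patient, baseline)):
        print(f"{name}: {a:+.3f}")
```

The scores depend entirely on the chosen baseline, and a feature left at its baseline value gets zero credit by construction, which illustrates why what counts as a satisfactory explanation is still debated.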

Conclusion

Exploring the black box phenomenon really opened my eyes to the technical advances and societal challenges posed by AI. As with many emerging technologies before it, AI is now an indispensable part of daily life. It should become a reality in healthcare too, and I believe the benefits outweigh the risks. Whether through regulation, education, or robust testing and evaluation, I remain bullish that these AI advances will be a boon for the industry.

References

- https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices

- https://www.nature.com/articles/s41746-020-00324-0

- https://medicalfuturist.com/fda-approved-ai-based-algorithms

- https://medicalfuturist.com/the-current-state-of-fda-approved-ai-based-medical-device

- https://www.accessdata.fda.gov/cdrh_docs/pdf19/K192004.pdf

- https://learnopencv.com/generative-and-discriminative-models

- https://stanford.edu/~shervine/teaching/cs-229/cheatsheet-supervised-learning

- https://stats.stackexchange.com/questions/403968/linking-generative-discriminative-models-to-supervised-and-unsupervised-learnin

- https://www.cbsnews.com/news/geoffrey-hinton-ai-dangers-60-minutes-transcript

- https://arstechnica.com/science/2019/12/how-neural-networks-work-and-why-theyve-become-a-big-business

- https://colah.github.io/posts/2014-03-NN-Manifolds-Topology

- https://spectrum.ieee.org/what-is-deep-learning

- https://www.techtarget.com/healthtechanalytics/feature/Navigating-the-black-box-AI-debate-in-healthcare

- https://theconversation.com/what-is-a-black-box-a-computer-scientist-explains-what-it-means-when-the-inner-workings-of-ais-are-hidden-203888